The Technological Tsunami

My relationship with AI is getting increasingly strange. Generalist AIs are still mostly useless, but narrow AIs continue to produce very impressive results. We have plenty of AIs that are better than any human at specific tasks like spotting cancer, but no AIs that can exercise common sense. We can synthesize terrifyingly realistic recreations of almost anyone’s voice, but they need human hand-holding to produce consistent emotional inflection. We have self-driving cars that work fine at noon on a clear day with no construction, but an errant traffic cone makes them panic.

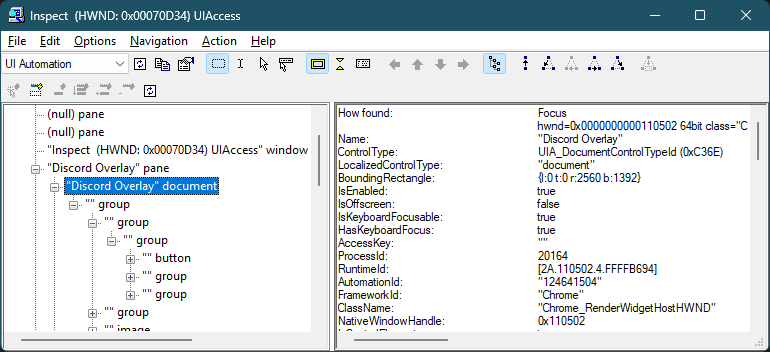

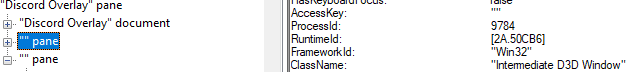

This is called “spiky intelligence”, and it is why ChatGPT can solve incredibly difficult math olympiad questions but struggles to push a button on a webpage. It seems to me that all these smart people with PhDs saying that AI will take over the workforce are convinced that, if AIs can continually get better at tackling difficult problems, eventually they’ll be able to train AIs that can also handle “easy” problems.

This is the exact same error that resulted in the second AI winter of the 90s - when researchers built expert systems that could outperform humans in narrow situations, they simply assumed those systems would soon be able to outperform all humans in all situations. This, obviously, didn’t happen, but task-specific engines did emerge from this, and now it is a well-known fact that your phone has enough processing power to effortlessly destroy every single human chess grandmaster that has ever lived. We still play chess, though.

What worries me is that this kind of spiky intelligence, despite lacking general common sense, will still radically upend the economy in ways that are simply impossible for a human brain to anticipate, because these AIs are, by definition, alien intelligences that defy all human intuition. The coming AI revolution is dangerous not because it’s going to destroy the whole world if we get it wrong, but because it is almost impossible to anticipate in any meaningful way. No human is capable of accurately guessing what weirdly specific task an AI might find easy or extraordinarily difficult. It’s XKCD #1425 (“Tasks”) but randomized for every single task on the entire planet.

AI enthusiasts often like to talk about The Singularity, a point in time when technological progress accelerates beyond human understanding and thus the future beyond it becomes unknowable. To me, this is not a very useful thing to think about. After all, we’re already incapable of predicting what society will look like 10 years from now. What’s concerning is that we’re used to being able to prepare for the next 2-3 years (ignoring black swan events), and I anticipate that AI will cause economic chaos in ways we cannot predict, precisely because it will rapidly automate entire categories of human employment out of existence, randomly. What will happen when we start automating entire industries faster than we can retrain people? What happens when someone tries to migrate to another career only to have that career automated away the moment they graduate?

We already struggle to keep up with the rapid pace of change, and AI is about to automate everything even faster, in extremely unpredictable ways. There may be a moment when it becomes impossible to anticipate the trajectory of your career three months from now, without AGI ever happening. We don’t need a superintelligent godlike AI to fuck everything up; the extraordinarily powerful narrow AIs we’re working on right now can fuck up the whole global economy by themselves. This moment is the only “Singularity” that I care about - a sort of Technological Tsunami, when entire economic sectors are swept away by automation so quickly that workers can’t course-correct to a new field fast enough.

We have options, since we know that it will fuck up the economy; we just don’t know how. The easiest and most pragmatic solution is UBI, but this seems difficult to make happen in a society run by rich people who are largely rewarded by how evil they are. There are plenty of political groups that are pushing for these kinds of solutions, but global policymakers appear to have been captured by AI money, which is only concerned about the dangers of a mythological AGI superintelligence instead of the impending economic catastrophe that is already beginning to develop. Because of this, I think there is a real question over whether or not human society will survive the coming technological tsunami. Again, we don’t need to invent AGI to destroy ourselves. We didn’t need AI to build nukes.

With that said, some people seem to deny that AGI will ever happen, which is also clearly wrong, at least to me. There are many things that will eventually happen, based on our current understanding of physics (and assuming we don’t blow ourselves up). Eventually we’ll cure cancer. Eventually we’ll reverse aging. Eventually we’ll have cybernetic implants and androids. Eventually we’ll be able to upload human minds to a computer. Eventually we’ll build a general artificial intelligence capable of improving itself. It might take 10 years or 100 years or 1000 years, but these are all things that will almost certainly happen given enough time and effort; we just don’t know when. At the very least, if you build as much computational power as the entire combined brainpower of the human race, you’ll be able to brute-force a superintelligence of some kind, and we’d better have solved the alignment problem by then, or augmented ourselves enough to handle it.

At the same time, AI companies continue making wild extrapolations about the capabilities of AIs that simply don’t line up with real-world performance. You cannot assume that an AI that scores better than all humans at every test will actually be good at anything other than taking tests, even if humans who score highly on those tests sometimes accomplish amazing things. I have a friend who was placed in Mensa at a very young age after scoring high on an IQ test. They complain that the only thing this group of very smart people does is argue about how to run the organization and what the latest cool puzzles are.

> If you know you are actually much more intelligent than the statistical average, increase your humility. It is too easy to believe your own judgements, to get stuck in your own bullshit. Being smart does not make you wise. Wisdom comes from constantly doubting yourself, and questioning your own thoughts and beliefs. Never think, even for a moment, that you have 'settled' anything completely. It's okay to know you are bright, it is not okay to think that gives you any certainty or authority of understanding.
>
> -- Chatoyance

The world’s smartest people are struggling to extrapolate the capabilities of an extremely spiky and utterly alien narrow intelligence, because it defies basic human intuition. Assuming an AI will be good at performing arbitrary tasks because it scored well on a test is the same kind of attribution error that happens with experts in a specific field - people will trust the expert’s opinion on something the expert has no experience with, like the economy, even though this almost never works out. This is such a persistent problem because highly intelligent people can invent plausible sounding arguments to support almost any position, and it can be exceedingly difficult to find the logical error in them. We are lucky that our current LLMs usually make egregious errors that are obviously wrong, instead of extremely subtle errors that would be almost impossible to detect.

We are in the middle of an AI revolution that will create new, extraordinarily powerful tools whose effects are almost impossible to predict. Instead of doing anything about the impending economic catastrophe, we are chasing AI safety hysteria and telling ghost stories about an AGI superintelligence that will likely not arrive for decades, if not centuries. Otherwise intelligent people are convincing themselves that there’s no point worrying about the economy crashing if AGI makes humans irrelevant. We’re so busy trying to avoid flying too close to the sun that we haven’t noticed a technological tsunami rising up beneath us, and if we continue ignoring it, we’ll drown before we even become airborne.